Precompressed HTML at the Edge: Eleventy Meets Cloudflare Workers

Introduction

In 2025, I wrote a series of web performance optimisation blog posts focussing on some of the key fundamentals of frontend web performance:

Caching

Asset fingerprinting and the preload response header in 11ty

Summary

The blog post describes how I enhanced web performance on my 11ty-built site by combining asset fingerprinting with the HTTP preload hint.

It explains that preload tells the browser to fetch critical resources earlier, but hashed filenames make this difficult to manage manually.

The solution was to generate preload Link headers automatically during the 11ty build. A custom script locates the fingerprinted CSS file and injects the correct preload header into the Cloudflare Pages _headers file.

This speeds up CSS delivery, removes the need for manual updates, and allows the use of long-lived Cache-Control header values such as max-age=31536000 and immutable.

Compression

Cranking Brotli up to 11 with Cloudflare Pro and 11ty.

Summary

The blog post explains how I improved the performance of my 11ty-powered blog after migrating to Cloudflare Pages by using Brotli compression at the highest level (11) for static assets. I also describe the difference between Brotli and gzip, outline how Cloudflare’s Pro plan typically applies a moderate Brotli level (4), and then show how to pre-compress JavaScript files to Brotli level 11. Lastly, I show how to serve them correctly both locally and via Cloudflare, and how to configure Cloudflare’s compression rules so that all assets benefit from the stronger compression, reducing file sizes and improving load performance.

Concatenation

Using an 11ty Shortcode to craft a custom CSS pipeline

Summary

While not strictly about concatenation, it covers related ideas. The post explains how I built a custom CSS pipeline for my 11ty site using a bespoke shortcode rather than the default bundle plugin.

It details how I preserved local live reload, added content-based fingerprinting for long-term caching, minified CSS with the clean-css package, enabled Brotli compression, and used disk and memory caching to prevent unnecessary work.

The build also generates hashed filenames and matching HTML link tags, ensuring production serves fully optimised, cache-friendly CSS automatically.

More compression

In this blog post, I’m going to take the compression a little further. I already have CSS and JavaScript Brotli compressed to the highest level (11) and served from Cloudflare Pages. But what about the third and final core technology of the web? Arguably the most important too… The HTML. In this post, I will look at how to compress your HTML to 11 during the 11ty build phase, and what modern technologies you need in order to make this work (it’s not as straightforward as I thought!)

Why HTML Brotli matters

Before we dive into the details, let’s discuss why Brotli compression is important for HTML. Well, to summarise Brotli compression in one sentence:

Brotli compression reduces file size by intelligently identifying repeated patterns in data and encoding them more efficiently using a combination of modern compression techniques.

This is fantastic for anything with repeating patterns, as the bytes saved over the network can be huge! The great thing about HTML is that it has a tonne of repeating patterns (e.g. markup)! This reduction in file size could potentially equate to:

Improved Web Performance

- Lower Time To First Byte impact on slow networks

- Improved First Contentful Paint

- Improved Largest Contentful Paint

- Reduced Total Blocking Time (indirectly)

Every kilobyte saved here multiplies across your traffic volume.

At scale, cost savings add up

Let’s assume you are serving a high traffic website, say the BBC, Google, or GOV.UK. Imagine how much bandwidth could be saved by simply compressing your HTML. Any percentage saving on millions of requests per day is going to mean:

- Less bandwidth

- Lower CDN egress

- Lower cloud costs

- Lower carbon footprint

This is a win both commercially and environmentally!

Improved performance on slow and unstable mobile networks

Brotli 11 improves the web for users where they need it the most:

- 3G connectivity

- High latency rural connections

- Congested public networks

- International access

HTML blocks everything else. If you shrink it aggressively, you unblock the page faster, meaning users get a better experience.

This is a real-world performance gain.

It indirectly improves Core Web Vitals

Smaller HTML means:

- Faster DOM construction

- Earlier CSS discovery

- Earlier JS discovery

- Reduced main thread idle gaps

This is especially important for server rendered pages or hybrid Server-side Rendered apps.

Compression cost is paid once for static assets

Yes, level 11 compression is expensive to compute. But this cost is paid back over time. You pay for the CPU time once. Users benefit forever assuming:

- the HTML is static and cacheable at the Content Delivery Network (CDN) using long-life caching headers

- you automate and pre-compress the HTML at build time

Compression Size Examples v1

Let’s have a look at a huge HTML page on the web. My go-to for this is either the W3C HTML5 Specification page OR NASA’s Astronomy Picture of the Day Archive.

Rather than choose, let’s just compress both!

W3C HTML5 Specification (Single Page)

- URL: https://www.w3.org/TR/2011/WD-html5-20110405/Overview.html

- Uncompressed size: 4.7 MB

- Brotli (Level 11): 590 KB (~87% saving)

That’s roughly an 87 percent reduction in bytes over the network when compressing the HTML with Brotli 11. Not bad for something that takes just over 10 seconds to run.

And yes, that’s 4.7 MB of HTML alone. It’s an absolute beast of a page.

For perspective, that would take around 12 to 15 minutes to download on a 56k modem in the late 90s. Just for the HTML. I might be showing my age here 😭
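For anyone who wants to check my modem maths, here is a rough sketch. The helper name and the 45 kbit/s "real-world" throughput are my own assumptions for illustration; only the 4.7 MB page size and the 56k line speed come from the text above.

```javascript
// Hypothetical helper: rough download time for a payload over a given link speed.
function downloadSeconds(sizeBytes, bitsPerSecond) {
  return (sizeBytes * 8) / bitsPerSecond;
}

// 4.7 MB of HTML, treated here as 4,700,000 bytes.
const htmlBytes = 4.7 * 1000 * 1000;

// Best case: the full 56 kbit/s line rate.
const theoreticalMinutes = downloadSeconds(htmlBytes, 56_000) / 60;

// Assumption: a more typical real-world throughput of roughly 45 kbit/s.
const realisticMinutes = downloadSeconds(htmlBytes, 45_000) / 60;

console.log(`Line rate:  ${theoreticalMinutes.toFixed(1)} minutes`);
console.log(`Real world: ${realisticMinutes.toFixed(1)} minutes`);
```

Even at the theoretical line rate the download takes over 11 minutes, so the 12 to 15 minute estimate is in the right ballpark once real-world overhead is factored in.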

NASA’s Astronomy Picture of the Day Archive

- URL: https://apod.nasa.gov/apod/archivepix.html

- Uncompressed size: 314 KB

- Brotli (Level 11): 53 KB (83% saving)

We’ve taken it from roughly a third of a megabyte down to just 53 KB. That is a pretty substantial reduction!

This means faster page loads, lower data usage, and a much smoother experience for anyone on a limited data plan or dealing with patchy connections. A very positive impact, right where it matters most for users.

How is this different from my other compression posts?

So how is this different from the Brotli compression I have mentioned in the previous blog posts?

In Cranking Brotli up to 11 with Cloudflare Pro and 11ty I used a combination of:

- Brotli CLI (e.g. brew install brotli)

- bash scripts (compress.sh, compress-directory.sh)

In that post, I overrode the Cloudflare Dashboard's “Compression Rules” (the default dynamically compresses HTML at Brotli level 4). The CDN compresses the HTML on-the-fly when the user’s browser requests it.

What this blog post describes is pre-compression to Brotli 11 at 11ty build time. To do this the workflow is:

- Apply Brotli level 11 pre-compression to HTML during the 11ty build on Cloudflare Pages.

- _helpers/html-compression.js runs after the site is written to _site.

- Each HTML file gets a matching .br file.

- Use the built-in Node zlib module, so no manual setup, CLI tools, scripts, Cloudflare configuration, or extra dependencies are required.

- Take advantage of Cloudflare Pages Functions, which run on Cloudflare Workers. This is a great opportunity to use a modern, fast-evolving platform that opens the door to powerful edge capabilities.

What’s the point?

Well, that’s a great question. As some readers may know, by default Cloudflare compresses HTML at Brotli level 4. Let’s have a look at how that compares with the level 11 results above:

Compression Size Examples v2

W3C HTML5 Specification (Single Page)

- URL: https://www.w3.org/TR/2011/WD-html5-20110405/Overview.html

- Uncompressed size: 4.7 MB

- Brotli (Level 4): 729 KB (83% saving)

- Compression Time (Level 4): 0.149 seconds

- Brotli (Level 11): 590 KB (87% saving)

- Compression Time (Level 11): 11.717 seconds

NASA’s Astronomy Picture of the Day Archive

- URL: https://apod.nasa.gov/apod/archivepix.html

- Uncompressed size: 314 KB

- Brotli (Level 4): 66 KB (79% saving)

- Compression Time (Level 4): 0.011 seconds

- Brotli (Level 11): 53 KB (83% saving)

- Compression Time (Level 11): 0.743 seconds

It’s worth noting that I used Paul Calvano's fantastic Compression Tester Tool to help with this basic analysis.

What you will likely notice is that even for large HTML files the size difference between compression level 4 and compression level 11 isn’t huge: 139 KB in the W3C HTML5 Specification example and only 13 KB for the NASA page. The real difference comes in computation time. Level 4 takes 0.149 seconds versus 11.717 seconds for Level 11! That’s a 7764% increase in time between Level 4 and Level 11! Although this is an extreme example, you can probably see why Level 11 isn’t used by Cloudflare for on-the-fly compression of HTML assets. Level 4 gives a good balance between compression ratio and compression speed. I’m betting countless smart people were involved in the analysis of using Level 4 by default! When you are a company that is serving an enormous number of requests every second, this decision really makes a difference in terms of processing power, infrastructure, and power usage!
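The percentages in this comparison can be sanity-checked with a few lines of arithmetic. This is only a sketch: the helper names are my own, and I am treating 4.7 MB as 4,700 KB, so the results can differ by a percentage point or so from the figures reported by the tool.

```javascript
// Hypothetical helpers for checking the figures quoted in this section.
function savingPercent(originalKb, compressedKb) {
  return (1 - compressedKb / originalKb) * 100;
}

function increasePercent(before, after) {
  return ((after - before) / before) * 100;
}

// W3C HTML5 spec figures from the list above.
const level4Kb = 729;
const level11Kb = 590;

console.log(`Level 11 saving: ${savingPercent(4700, level11Kb).toFixed(1)}%`);
console.log(`Extra bytes saved by Level 11: ${level4Kb - level11Kb} KB`);
console.log(`Compression time increase: ${increasePercent(0.149, 11.717).toFixed(0)}%`);
```

The same two helpers work for the NASA page by swapping in its sizes and timings.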

Thankfully, the way that I have implemented level 11 compression on my blog, processing time doesn’t really matter. All the HTML is being compressed at 11ty build time. As I said above, the cost of this additional CPU time is paid back over time by users getting a better experience (even if only slightly). Furthermore, remember there’s a slight reduction in storage required on the CDN. From my perspective, if it is low effort after setup, it feels like the right move to implement it.

Problems

As I found out during implementation, it isn’t as simple as compressing the HTML to 11 and setting a static Content-Encoding header for HTML in the _headers file (trust me, I tried it!)

Problem 1: URL Path vs Actual File Path Mismatch

When serving static assets like CSS, JS, or images, there is usually a simple one-to-one relationship between the URL and the file on disk. A request to /css/site.css maps directly to _site/css/site.css. No extra logic is required because the URL path matches the file path exactly. I had no idea, but I soon found out that HTML pages behave differently. A request to / or /blog/post/ does not correspond to a literal file at that path. Instead, the server applies a convention and serves index.html inside that directory. So /blog/post/ actually maps to blog/post/index.html on disk. This mapping happens automatically when Cloudflare serves uncompressed HTML through its very efficient static asset layer.

The problem appears when serving pre-compressed Brotli files. You cannot simply request the same URL and expect the .br file to resolve. Instead, the Cloudflare Function must manually translate the directory style URL into the real file path before appending .br.

For example, / becomes /index.html.br, /blog/post/ becomes /blog/post/index.html.br, and /404.html becomes /404.html.br.

In short, HTML requires explicit path translation because the browser sees a directory style URL while the actual file stored on disk is index.html. The Cloudflare Function must bridge that gap to correctly serve the Brotli 11 compressed version, rather than the uncompressed HTML version.
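The mapping described above can be sketched as a small pure function. The function name is hypothetical, and treating every path ending in .html as a document is my own generalisation for illustration (the Function shown later in this post only special-cases /404.html).

```javascript
// Sketch: translate a request path into the path of its pre-compressed file.
// Returns null when the path does not look like an HTML document URL.
function toBrotliPath(pathname) {
  // Explicit .html files such as /404.html map to /404.html.br.
  if (pathname.endsWith('.html')) {
    return `${pathname}.br`;
  }
  // Directory-style URLs ("/", "/blog/post/") are backed by index.html on disk.
  if (pathname.endsWith('/')) {
    return `${pathname}index.html.br`;
  }
  // Anything else (CSS, JS, images) is left to the static asset layer.
  return null;
}

for (const p of ['/', '/blog/post/', '/404.html', '/css/site.css']) {
  console.log(p, '->', toBrotliPath(p));
}
```

Keeping the translation in one function makes it easy to test in isolation before wiring it into the edge code.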

It actually makes sense now that I think about it. I’ve always just taken the automatic appending of index.html to a URL path for granted! The fact that a user on the web doesn’t even have to think about that small detail shows how well it works! As Dieter Rams once said:

Good design is as little design as possible.

Problem 2: A page full of Wingdings

I’m showing my age again, but for readers who don’t remember early versions of Windows (e.g. 3.1), it came bundled with a font called Wingdings. This TrueType font contains many widely recognised shapes and gestures as well as some recognised world symbols.

Wingdings was an early symbol font that experimented with pictographic digital symbols, in the same spirit as text emoticons like :-) & ¯\_(ツ)_/¯, and a precursor of sorts to the modern world of emoji!

Essentially, what was happening was that the Cloudflare server was serving raw Brotli compressed HTML files to the browser, expecting it to understand what these (now binary, not text) files were, and how to read and understand them. I was essentially serving the HTML without the following headers:

Content-Type: text/html; charset=UTF-8

Content-Encoding: br

Vary: Accept-Encoding

Here’s a brief explanation of these headers:

- Content-Encoding: br: This tells the browser “What I’m sending you is a Brotli compressed file, you are going to need to decode it before you can understand it”.

- Content-Type: text/html; charset=UTF-8: This tells the browser that the decoded content is HTML and which character set ('charset') to use after decompression. This is key to the browser parsing the HTML correctly.

- Vary: Accept-Encoding: This only affects caching (e.g. Content Delivery Networks). It tells the cache to store separate versions of this file depending on the Accept-Encoding request header.
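As a rough illustration of the three headers, here is a sketch using the standard Headers class (available as a global in Cloudflare Workers and, as far as I know, in Node.js 18 and later). The function name and the ETag value are hypothetical.

```javascript
// Sketch: start from whatever headers the stored asset came with, then add the
// three headers a pre-compressed HTML response needs.
function buildBrotliHtmlHeaders(existingHeaders = {}) {
  const headers = new Headers(existingHeaders);
  headers.set('Content-Type', 'text/html; charset=UTF-8'); // what it is once decoded
  headers.set('Content-Encoding', 'br');                   // how the bytes are encoded
  headers.set('Vary', 'Accept-Encoding');                  // caches must key on this
  return headers;
}

// Hypothetical existing header, just to show it is preserved.
const headers = buildBrotliHtmlHeaders({ ETag: '"abc123"' });
console.log(Object.fromEntries(headers));
```

Leave any one of these out and you are back to a page full of Wingdings, or a cache handing Brotli bytes to a client that never asked for them.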

The result of the above gave me a homepage that looked like the image below (interesting but not exactly readable!):

My Approach

Step 1: Build-time compression

After the site is generated, it moves into a final preparation stage before going live.

During this stage, the system goes through all the finished HTML pages and creates highly compressed versions of them. These compressed files sit alongside the originals and are ready to be served immediately.

Because this happens as part of the 11ty build process, every release automatically includes freshly optimised files. This means the live site can deliver pages faster, with smaller file sizes and no need to compress anything on the fly (e.g. what Cloudflare does with its HTML compression to Brotli level 4).

The result is better performance for users, with no extra overhead once the site is hosted and running on Cloudflare Pages.

Step 2: Cloudflare Pages Function for content negotiation

When someone visits a page on the site, a lightweight Cloudflare Function (via a Cloudflare Worker) checks whether the user’s browser supports Brotli compression.

If it does, the system serves the Brotli 11 pre-compressed version of the page. This keeps file sizes small and pages loading quickly.

If the browser does not support this compression, or if a compressed version is not available, the system simply serves the standard uncompressed version of the HTML instead.

No extra processing happens at this stage. The edge layer (Cloudflare Function + Worker) is only deciding which version of the already prepared files to send. All optimisation work has already been done earlier in the 11ty build process.
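The "does this browser support Brotli?" decision can be sketched as a small function. Note that this is a stricter alternative to the simple substring check used in the Function later in this post, not the code I actually run: the function name is hypothetical, it honours an explicit br;q=0 refusal, and it deliberately ignores the * wildcard.

```javascript
// Sketch: decide whether an Accept-Encoding header value allows Brotli.
// Handles values such as "gzip, deflate, br" and "br;q=0" (explicitly refused).
function acceptsBrotli(acceptEncoding) {
  if (!acceptEncoding) return false;
  return acceptEncoding.split(',').some((part) => {
    const [coding, ...params] = part.trim().toLowerCase().split(';');
    if (coding.trim() !== 'br') return false;
    const q = params.map((p) => p.trim()).find((p) => p.startsWith('q='));
    // A missing q parameter means full preference (q=1).
    return q === undefined || parseFloat(q.slice(2)) > 0;
  });
}

console.log(acceptsBrotli('gzip, deflate, br')); // typical modern browser
console.log(acceptsBrotli('gzip, deflate'));     // no Brotli support advertised
console.log(acceptsBrotli('gzip, br;q=0'));      // Brotli explicitly refused
```

In practice every mainstream browser sends br without a q value, so the simpler check behaves the same for real traffic. The stricter version mostly matters for unusual clients and proxies.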

The final result is fast delivery, efficient bandwidth use, and a simple, reliable build setup. Everyone wins!

Step 3: The Eleventy build uses Node.js zlib for seamless integration

As mentioned earlier, this Brotli implementation differs from others I have used. Instead of relying on bash scripts such as compress.sh or compress-directory.sh, it uses Node.js’s built-in zlib module. Because zlib is part of Node.js core, it is stable, well maintained, and requires no external dependencies. The module itself has been available since the earliest Node.js releases, and its Brotli functions have shipped since Node.js 10.16 and 11.7, so it is a sensible default choice. I may even revisit the other build process in future and consider replacing the remaining bash scripts with a fully Node.js based approach.
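To give a feel for the zlib Brotli API before the full implementation, here is a minimal, self-contained sketch. The sample markup and the compressAt helper are made up for illustration. The one detail worth noticing is that Brotli quality is passed through the params option, keyed by BROTLI_PARAM_QUALITY.

```javascript
import { brotliCompressSync, brotliDecompressSync, constants } from 'node:zlib';

// Sample markup with plenty of repetition, standing in for a real page.
const html = '<ul>' + '<li class="item">Hello</li>'.repeat(500) + '</ul>';

// Hypothetical helper: compress text at a given Brotli quality (0 to 11).
function compressAt(quality, text) {
  return brotliCompressSync(Buffer.from(text), {
    params: {
      [constants.BROTLI_PARAM_QUALITY]: quality,
      [constants.BROTLI_PARAM_MODE]: constants.BROTLI_MODE_TEXT,
    },
  });
}

const fast = compressAt(4, html);
const max = compressAt(11, html);

console.log(`original: ${Buffer.byteLength(html)} bytes`);
console.log(`level 4:  ${fast.length} bytes`);
console.log(`level 11: ${max.length} bytes`);

// Round trip to confirm the compressed bytes decode back to the same markup.
const roundTripOk = brotliDecompressSync(max).toString('utf8') === html;
console.log(`round trip ok: ${roundTripOk}`);
```

Swapping the sample string for the contents of a real HTML file is a quick way to see what level 11 would buy you on your own pages.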

Implementation

Next, let’s stop the waffling and get onto the actual implementation. I assume that’s what readers are here for after all!

Core compression utility

Here is the main compression file that is used to compress the HTML to Brotli 11:

/**
 * Core compression utility: Brotli compression for build-time pre-compression.
 *
 * This module is the single source of truth for Brotli in the project. It is used by:
 * - html-compression.js: compresses all HTML in _site to .br (level 11)
 * - css-manipulation.js: compresses processed CSS to .br when writing to _site
 * - js-compression.js: compresses minified JS in _site/js to .br
 *
 * All compression happens during the Eleventy production build (postbuild phase).
 * Pre-compressed .br files are then served via content negotiation in functions/[[path]].js
 * when the client sends Accept-Encoding: br, avoiding any runtime or CDN dynamic compression.
 */
import { brotliCompressSync, constants } from 'zlib';

/**
 * Default Brotli compression level.
 *
 * Brotli levels range from 0 to 11:
 * - 0–4: Fast, lower ratio (typical for dynamic/on-the-fly compression; e.g. CDNs often use 4).
 * - 11: Maximum ratio, slowest; ideal for static assets compressed once at build time.
 *
 * We use 11 because compression runs only during the build, so CPU cost is paid once per
 * deploy. The resulting .br files are then served as-is with no re-compression at the edge.
 */
export const BROTLI_LEVEL = 11;

/**
 * Compress input data with Brotli.
 *
 * Uses Node's synchronous zlib API so callers can use the function in a simple, blocking
 * way during the build (no need to await). Input is normalized to a Buffer so that
 * strings (e.g. file contents read as UTF-8) and TypedArrays are supported.
 *
 * @param {Buffer | Uint8Array | string} input - Data to compress. If a string, it is
 * encoded as UTF-8. Buffers and Uint8Array are used as-is (after copying to a Buffer
 * when necessary via Buffer.from).
 * @param {number} [level=BROTLI_LEVEL] - Compression level 0–11. Defaults to 11 for
 * maximum ratio. Can be overridden (e.g. via BROTLI_COMPRESSION_LEVEL in css-manipulation).
 * @returns {Buffer} - Compressed data as a Buffer. Callers typically write this to a
 * file with a .br extension (e.g. index.html.br).
 */
export function brotliCompress(input, level = BROTLI_LEVEL) {
  const buffer = Buffer.isBuffer(input) ? input : Buffer.from(input);
  // Brotli takes its quality via `params`. A top-level `level` option only applies
  // to gzip/deflate and is ignored by brotliCompressSync, which would then silently
  // fall back to its default quality regardless of the level requested.
  return brotliCompressSync(buffer, {
    params: { [constants.BROTLI_PARAM_QUALITY]: level },
  });
}

I appreciate that not everyone prefers heavily commented code, so there is also a version in this Gist with minimal comments and improved readability.

HTML compression post-build

The post-build HTML compression file:

/**
 * HTML Brotli compression (postbuild).
 *
 * This module compresses every HTML file in the Eleventy output directory (_site) to
 * Brotli level 11 and writes a corresponding .br file alongside each .html file
 * (e.g. index.html → index.html.br, blog/post/index.html → blog/post/index.html.br).
 *
 * When it runs:
 * Only during the production postbuild phase, after Eleventy has finished writing
 * _site. It is invoked from _config/build-events.js in the eleventy.after handler
 * (alongside JS minification, JS Brotli, and preload header generation).
 *
 * How .br files are served:
 * The Cloudflare Pages Function functions/[[path]].js performs content negotiation
 * for HTML document requests. When the client sends Accept-Encoding: br, the Function
 * fetches the pre-built .br asset (e.g. / → index.html.br) and returns it with
 * Content-Encoding: br. Clients that do not advertise Brotli support receive the
 * uncompressed .html from the static asset bucket. No dynamic compression runs at
 * the edge; this step does all compression once at build time.
 *
 * Uses the core compression utility _helpers/compression.js for the actual Brotli call.
 */
import fs from 'fs';
import path from 'path';
import { brotliCompress, BROTLI_LEVEL } from './compression.js';

/**
 * Recursively find all .html files under a directory.
 *
 * Used to discover every HTML file in _site (root index.html, blog posts, portfolio
 * items, 404.html, etc.). Paths are returned as relative to _site so that we can
 * join them with siteDir for full paths and still have readable relative paths for
 * logging and error messages.
 *
 * @param {string} dir - Absolute or relative directory path to search (e.g. ./_site or a subdir).
 * @param {string[]} [acc=[]] - Accumulator array; results are pushed here during recursion.
 * @returns {string[]} Relative paths to .html files (e.g. ['index.html', 'blog/post/index.html']).
 */
function findHtmlFiles(dir, acc = []) {
  const entries = fs.readdirSync(dir, { withFileTypes: true });
  for (const entry of entries) {
    const fullPath = path.join(dir, entry.name);
    const relPath = path.relative('./_site', fullPath);
    if (entry.isDirectory()) {
      findHtmlFiles(fullPath, acc);
    } else if (entry.isFile() && entry.name.endsWith('.html')) {
      acc.push(relPath);
    }
  }
  return acc;
}

/**
 * Compress all HTML files in _site with Brotli level 11, writing .br files.
 *
 * Call this only after the Eleventy build has completed and _site is fully written.
 * For each .html file: reads content, compresses with brotliCompress (level 11),
 * writes <filename>.br next to the original. Existing .br files are skipped if their
 * mtime is >= the source .html mtime (incremental safety; in a full build all HTML is
 * usually newer, so most files are compressed). Logs counts, total bytes saved, and
 * duration; collects per-file errors without stopping the loop.
 */
export function compressHtmlFiles() {
  const startTime = Date.now();
  console.log('\n━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━');
  console.log('🚀 PRODUCTION POSTBUILD: Starting HTML Brotli compression');
  console.log('━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\n');

  const siteDir = path.join('./_site');
  if (!fs.existsSync(siteDir)) {
    console.log('⚠️ _site directory not found, skipping HTML compression');
    return;
  }

  const htmlFiles = findHtmlFiles(siteDir);
  if (htmlFiles.length === 0) {
    console.log('⚠️ No HTML files found, skipping compression');
    return;
  }

  let compressedCount = 0;
  let skippedCount = 0;
  let totalOriginal = 0;
  let totalCompressed = 0;
  const errors = [];

  for (const relPath of htmlFiles) {
    const inputPath = path.join(siteDir, relPath);
    const outputPath = `${inputPath}.br`;
    try {
      // Skip writing if .br already exists and is not older than the source .html
      if (fs.existsSync(outputPath)) {
        const inputStats = fs.statSync(inputPath);
        const outputStats = fs.statSync(outputPath);
        if (outputStats.mtimeMs >= inputStats.mtimeMs) {
          skippedCount++;
          continue;
        }
      }
      const fileContent = fs.readFileSync(inputPath);
      const originalSize = fileContent.length;
      const brotliBuffer = brotliCompress(fileContent, BROTLI_LEVEL);
      const compressedSize = brotliBuffer.length;
      fs.writeFileSync(outputPath, brotliBuffer);
      compressedCount++;
      totalOriginal += originalSize;
      totalCompressed += compressedSize;
    } catch (error) {
      errors.push(`❌ ${relPath}: ${error.message}`);
    }
  }

  const totalTime = Date.now() - startTime;
  const savedPercent = totalOriginal > 0 ? ((1 - totalCompressed / totalOriginal) * 100).toFixed(1) : '0';

  console.log(`✅ Compressed ${compressedCount} HTML file(s) (${skippedCount} skipped, up-to-date)`);
  if (compressedCount > 0) {
    console.log(
      ` ${(totalOriginal / 1024).toFixed(1)} KB → ${(totalCompressed / 1024).toFixed(1)} KB (${savedPercent}% smaller)`
    );
  }
  console.log(` Finished in ${totalTime}ms (${(totalTime / 1000).toFixed(2)}s)`);

  if (errors.length > 0) {
    console.error('\nErrors:');
    errors.forEach(e => console.error(` ${e}`));
  }
  console.log('\n━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━\n');
}

A version with minimal comments and much improved readability is available in a Gist here.

Cloudflare Pages Function for content negotiation

Here we have the Cloudflare Function file that runs in the Cloudflare Worker. It is located in the /functions directory in the root of the repository so it can be detected when Cloudflare Pages builds.

/**
 * Catch-all Cloudflare Pages Function: HTML document content negotiation.
 *
 * This Function runs for every GET and HEAD request that is not handled by a more
 * specific route (e.g. POST /api/contact is handled by functions/api/contact.js).
 * Its job is to serve pre-compressed Brotli (.br) HTML when the client supports it,
 * and otherwise pass through to the static asset bucket (uncompressed HTML).
 *
 * Pre-compressed .br files are produced at build time by _helpers/html-compression.js
 * (postbuild): every .html in _site gets a matching .br (e.g. index.html.br,
 * blog/post/index.html.br). This Function does not compress on the fly; it only
 * chooses which asset to serve and sets the correct response headers.
 *
 * Flow:
 * 1. If the request is not for an HTML document URL (see below), pass through to ASSETS.
 * 2. If the client does not send Accept-Encoding: br, pass through (serve uncompressed HTML).
 * 3. Otherwise, fetch the corresponding .br asset; if missing or error, fall back to ASSETS
 *    for the original request (uncompressed).
 * 4. If .br exists, return it with Content-Encoding: br and no-transform so nothing
 *    re-compresses the body.
 */
async function handleHtmlWithBrotli(request, env) {
  const url = new URL(request.url);
  const pathname = url.pathname;

  // Only treat as HTML document: root (/), directory-style paths ending in /, or 404
  // (Cloudflare Pages maps /404 to /404.html). All other paths (e.g. /script.js,
  // /style.css, /favicon.ico) go straight to static assets; Pages serves .br for
  // static files when available and client sends Accept-Encoding: br.
  const isHtmlDocument = pathname === '/' || pathname.endsWith('/') || pathname === '/404.html';
  if (!isHtmlDocument) {
    return env.ASSETS.fetch(request);
  }

  const acceptsBrotli = request.headers.get('Accept-Encoding')?.includes('br') ?? false;
  if (!acceptsBrotli) {
    return env.ASSETS.fetch(request);
  }

  // Map URL path to the .br file in the asset bucket. Eleventy outputs directory
  // indexes as index.html (e.g. /blog/post/ → blog/post/index.html), so the .br
  // file is <path>index.html.br.
  const brPath =
    pathname === '/' ? '/index.html.br' : pathname === '/404.html' ? '/404.html.br' : `${pathname}index.html.br`;
  const brUrl = new URL(brPath, url.origin);
  const brResponse = await env.ASSETS.fetch(brUrl.toString());
  if (!brResponse.ok) {
    return env.ASSETS.fetch(request);
  }

  // Build response from the .br body with headers that declare Brotli and prevent
  // any downstream re-compression or transformation.
  const headers = new Headers(brResponse.headers);
  headers.set('Content-Encoding', 'br');
  headers.set('Content-Type', 'text/html; charset=UTF-8');
  headers.set('Vary', 'Accept-Encoding');
  // no-transform: tells caches (including Cloudflare) not to re-encode or modify the body
  headers.set('Cache-Control', 'public, max-age=31536000, no-transform');

  return new Response(brResponse.body, {
    status: brResponse.status,
    headers,
    // encodeBody: 'manual' means we are returning the body as-is (already Brotli).
    // Without this, the Workers runtime might try to compress the response again.
    encodeBody: 'manual',
  });
}

export const onRequestGet = async ({ request, env }) => {
  return handleHtmlWithBrotli(request, env);
};

export const onRequestHead = async ({ request, env }) => {
  return handleHtmlWithBrotli(request, env);
};

A version with minimal comments and much better readability is available in a Gist here.

Build lifecycle wiring

This is the main build file I use to build my 11ty blog for production on Cloudflare Pages.

/**
 * Build lifecycle wiring: registers Eleventy before/after hooks for production.
 *
 * This module is the single place where postbuild steps are scheduled. It is loaded
 * from the main Eleventy config (e.g. eleventy.config.js), which calls
 * registerBuildEvents(eleventyConfig). Handlers run only when env.isLocal is false
 * (i.e. production or preview builds); local dev builds skip all of this so that
 * _site is left as plain output and the dev server stays fast.
 *
 * Order of operations:
 * - eleventy.before: clear CSS build cache so CSS is regenerated and Brotli-compressed
 *   from scratch when needed.
 * - eleventy.after: run the production postbuild phase in a fixed order:
 *   1. generatePreloadHeaders(): write Link headers into _headers for CSS (and .br).
 *   2. minifyJavaScriptFiles(): minify JS in _site/js (must run before Brotli).
 *   3. compressJavaScriptFiles(): Brotli-compress JS to .br.
 *   4. compressHtmlFiles(): Brotli-compress all HTML in _site to .br.
 *   Then: destroy HTTP/HTTPS global agents to avoid hanging connections, and on
 *   production only force process.exit(0) after a short delay so the process
 *   terminates cleanly on CI/Cloudflare Pages.
 */
import env from '../_data/env.js';
import { clearCssBuildCache } from '../_helpers/css-manipulation.js';
import { generatePreloadHeaders } from '../_helpers/header-generator.js';
import { compressHtmlFiles } from '../_helpers/html-compression.js';
import { compressJavaScriptFiles } from '../_helpers/js-compression.js';
import { minifyJavaScriptFiles } from '../_helpers/js-minify.js';

/**
 * Register eleventy.before and eleventy.after handlers.
 * Only registered when !env.isLocal (production/preview).
 * @param {import("@11ty/eleventy").UserConfig} eleventyConfig
 */
export function registerBuildEvents(eleventyConfig) {
  if (env.isLocal) {
    return;
  }

  // Before build: clear any cached CSS build artifacts so this run produces fresh
  // processed and Brotli-compressed CSS when templates reference CSS.
  eleventyConfig.on('eleventy.before', () => {
    clearCssBuildCache();
  });

  eleventyConfig.on('eleventy.after', async () => {
    console.log('\n═══════════════════════════════════════════════════════════════════════════════');
    console.log('🚀 PRODUCTION POSTBUILD PHASE: Beginning postbuild operations');
    console.log('═══════════════════════════════════════════════════════════════════════════════\n');

    generatePreloadHeaders();
    await minifyJavaScriptFiles();
    compressJavaScriptFiles();
    compressHtmlFiles();

    console.log('\n═══════════════════════════════════════════════════════════════════════════════');
    console.log('✅ PRODUCTION POSTBUILD PHASE: All postbuild operations completed');
    console.log('═══════════════════════════════════════════════════════════════════════════════\n');

    // Tear down Node's default HTTP/HTTPS agents so the process can exit without
    // waiting for keep-alive connections to time out (e.g. on Cloudflare Pages CI).
    console.log('🔧 Forcing cleanup of HTTP connections and timers...');
    await new Promise(resolve => setTimeout(resolve, 100));
    try {
      const http = await import('http');
      const https = await import('https');
      if (http.globalAgent) {
        http.globalAgent.destroy();
        console.log('✅ Destroyed HTTP global agent');
      }
      if (https.globalAgent) {
        https.globalAgent.destroy();
        console.log('✅ Destroyed HTTPS global agent');
      }
    } catch (error) {
      console.warn('⚠️ Could not destroy HTTP agents:', error.message);
    }
    await new Promise(resolve => setTimeout(resolve, 50));
    console.log('✅ HTTP connection cleanup completed');

    // On production builds only, force exit after a short delay so the runner
    // (e.g. Cloudflare Pages) gets a clean exit code and doesn't hang.
    if (env.isProd) {
      console.log('🏁 Production build complete - forcing process exit in 2 seconds...');
      setTimeout(() => {
        console.log('👋 Forcing clean exit now');
        process.exit(0);
      }, 2000);
    }
  });
}

A version with minimal comments and much improved readability is available in a Gist here.

Other Technical details worth mentioning

Here are 4 other small technical details worth explaining as part of the implementation:

Why encodeBody: 'manual' is required

The content we send back to the user's browser is already compressed using Brotli. It is being loaded from a file that has already been compressed in advance.

If we don't tell Cloudflare to leave it alone, it may assume the content is not compressed and try to compress it again. Compressing something that is already compressed can cause problems and may result in a broken or unreadable output, plus it is a waste of CPU time and resources.

By setting encodeBody: 'manual', we are telling Cloudflare to send the content exactly as it is, without changing it. This ensures the pre-compressed file is delivered correctly to the user's browser.
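As a rough sketch of what this looks like inside a Worker or Pages Function, the helper below wraps bytes that are already Brotli-compressed in a Response. The function name `brotliResponse` and its parameters are illustrative assumptions, not the code used on this site. `encodeBody` is the Workers-specific option discussed above; other runtimes such as Node simply ignore it.

```javascript
// Hypothetical helper: wrap bytes that are ALREADY Brotli-compressed in a Response.
// `compressedBody` is assumed to be the contents of a pre-built .br file.
function brotliResponse(compressedBody, contentType = 'text/html; charset=utf-8') {
  const headers = new Headers({
    'Content-Type': contentType,
    // Tell the browser which decoding step to apply to the body.
    'Content-Encoding': 'br',
  });

  return new Response(compressedBody, {
    status: 200,
    headers,
    // Workers-specific option: the body already matches Content-Encoding,
    // so the runtime should pass it through instead of compressing it again.
    encodeBody: 'manual',
  });
}
```

The key point is that `Content-Encoding: br` and `encodeBody: 'manual'` travel together: the header describes the bytes, and the option stops the runtime from touching them.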

Why Vary: Accept-Encoding and Cache-Control: no-transform matter

These two response headers are critical for making pre-compressed responses work. Here is why:

Vary: Accept-Encoding: This notifies browsers and CDNs that the response can change depending on what kind of compression the browser supports.

For example, if a browser says it supports Brotli, the cache will store and return the Brotli version for those requests. If another browser doesn't support Brotli, the cache will store and return a different version, such as an uncompressed version. This prevents the wrong format being sent to the wrong browser.

Cache-Control: no-transform: This informs caches and other systems between the server and the user's browser not to modify the content. It asserts that the response should not be compressed again or altered in any way.

Without this setting, a proxy might try to compress the content again, which can cause errors and waste processing power. With this header in place, the already compressed file is stored and delivered exactly as intended.
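To make the two headers concrete, here is a minimal sketch of the decision a Worker could make per request. The helper names `acceptsBrotli` and `variantHeaders` are hypothetical, and the parsing is deliberately simplified: it ignores the `*` wildcard and only looks for an explicit `br` entry.

```javascript
// Hypothetical helper: does this Accept-Encoding request header allow Brotli?
function acceptsBrotli(acceptEncoding) {
  if (!acceptEncoding) return false;
  return acceptEncoding.split(',').some(part => {
    const [coding, ...params] = part.trim().toLowerCase().split(';');
    if (coding.trim() !== 'br') return false;
    // "br;q=0" means the client explicitly refuses Brotli.
    const q = params.map(p => p.trim()).find(p => p.startsWith('q='));
    return q === undefined || parseFloat(q.slice(2)) > 0;
  });
}

// Hypothetical helper: response headers for whichever variant gets served.
function variantHeaders(acceptEncoding) {
  // Vary goes on every variant so caches key on the request's Accept-Encoding.
  const headers = { 'Vary': 'Accept-Encoding' };
  if (acceptsBrotli(acceptEncoding)) {
    headers['Content-Encoding'] = 'br';
    // Ask intermediaries not to re-encode or otherwise alter the body.
    headers['Cache-Control'] = 'no-transform';
  }
  return headers;
}
```

In a real response, `no-transform` would normally sit alongside whatever other Cache-Control directives the page already uses; it is shown on its own here only to keep the sketch short.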

Incremental build optimisation (runtime check to skip unchanged files)

After the Eleventy build finishes, the post-build step recursively scans through the output folder, such as the _site directory, and checks each HTML file.

Before compressing a file, it checks whether a matching .br file already exists and whether it is up-to-date. If the .br file is the same age or newer than the original file, it is skipped. If the page is new or has been updated, a fresh compressed version is created.

This avoids needlessly recompressing pages that have not changed, which keeps the post-build step fast. When only a few pages are updated, only those pages are recompressed.
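The freshness rule described above can be sketched as a small pure function. The names `needsCompression` and `selectStalePages` are hypothetical, and the timestamps are made up for illustration; in a real build they would come from something like `fs.statSync(path).mtimeMs`.

```javascript
// Hypothetical helper: decide whether an HTML file needs (re)compressing.
// Both arguments are modification times in milliseconds.
// Pass null for brMtimeMs when no .br file exists yet.
function needsCompression(htmlMtimeMs, brMtimeMs) {
  if (brMtimeMs == null) return true; // new page, nothing compressed yet
  return brMtimeMs < htmlMtimeMs;     // .br older than its source means stale
}

// Hypothetical helper: pick the pages that still need work.
function selectStalePages(pages) {
  return pages
    .filter(page => needsCompression(page.htmlMtimeMs, page.brMtimeMs))
    .map(page => page.path);
}

// Made-up timestamps covering the four cases.
const stale = selectStalePages([
  { path: '_site/index.html', htmlMtimeMs: 2000, brMtimeMs: 2000 },             // same age: skipped
  { path: '_site/blog/old/index.html', htmlMtimeMs: 1000, brMtimeMs: 1500 },    // .br newer: skipped
  { path: '_site/blog/edited/index.html', htmlMtimeMs: 3000, brMtimeMs: 1500 }, // .br older: recompress
  { path: '_site/blog/new/index.html', htmlMtimeMs: 3000, brMtimeMs: null },    // no .br yet: compress
]);

console.log(stale);
// → [ '_site/blog/edited/index.html', '_site/blog/new/index.html' ]
```

Comparing modification times is cheap but not bulletproof: a build that rewrites every HTML file on each run would mark every page as stale, in which case a content hash would be a more reliable check.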

Why we need a Cloudflare Function instead of just the _headers file for HTML Brotli

1. _headers can only change headers, not the file itself

The _headers file lets us add or modify (but not remove) response headers. It doesn't control which file is actually sent back to the browser.

So, when someone visits / or /blog/post/, Cloudflare Pages automatically serves index.html or blog/post/index.html.

If we want to serve the Brotli version of the HTML, we need to send index.html.br instead. But the _headers file has no way to switch the file being served.

2. Setting the header alone is not enough

Even if you add Content-Encoding: br in the static _headers file, the actual file being sent would still be the normal uncompressed version of the HTML.

The browser would see this Brotli header and try to decompress the response. Since the content being sent isn't compressed, it would simply fail and the page would break.
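The file-switching step that _headers cannot do is small once it lives in a Function. Below is a minimal sketch of the path mapping, assuming each .br file sits next to its HTML file in the build output. The helper name `toBrotliAssetPath` is hypothetical.

```javascript
// Hypothetical helper: map a request path to the pre-compressed file to serve.
// Returns null when the path is not an HTML page, so normal static serving applies.
function toBrotliAssetPath(pathname) {
  // Directory-style URLs resolve to their index.html, so serve index.html.br.
  if (pathname.endsWith('/')) return `${pathname}index.html.br`;
  // Explicit .html requests map to the sibling .br file.
  if (pathname.endsWith('.html')) return `${pathname}.br`;
  return null;
}

console.log(toBrotliAssetPath('/'));            // → /index.html.br
console.log(toBrotliAssetPath('/blog/post/'));  // → /blog/post/index.html.br
console.log(toBrotliAssetPath('/css/app.css')); // → null
```

The Function would then fetch that path from the static assets and return it with Content-Encoding: br, falling back to the regular HTML when the browser does not accept Brotli or the .br file is missing.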

Results

Looking at my build logs I can now see that compressing the HTML to Brotli 11 has had the following results:

📊 HTML Brotli 11 total savings: 123.4 KB (75.2% reduction)

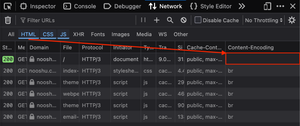

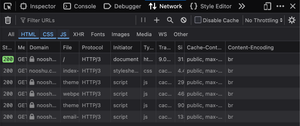

That’s not too bad a saving considering how simple it is to set up and integrate into the 11ty build process! Thankfully, now that it’s done I can just “set it and forget it!”. Let's examine the results from the DevTools Network panel below:

DevTools Before

DevTools After

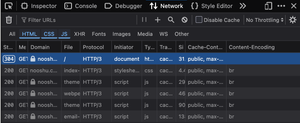

DevTools After Page Reload

Curiously, when reloading the page with DevTools open, the HTTP status code changes from 200 to 304 and the Content-Encoding: br response header disappears. The reason is one of the following:

- The reload request doesn’t contain an Accept-Encoding: br header, so the Cloudflare Worker simply returns the uncompressed version of the HTML file, as expected.
- The 304 has no response body, so there’s nothing to show as being compressed.

Summary

By pre-compressing this blog’s HTML during the 11ty build phase, I have reduced the number of bytes sent on each page load. While the savings are small for a low-traffic site like mine, at scale across millions of users and billions of requests per day, this approach could deliver meaningful bandwidth reductions and incremental performance improvements. This is especially true where network speed and stability vary globally.

Thank you for reading, I hope you found it useful. Edge Workers are an incredibly powerful technology. I genuinely look forward to using them again in the future.

I am always open to feedback and corrections. If you spot anything that needs fixing or is incorrect, please let me know. I will credit you in the post changelog below.

Post changelog:

- 21/02/26: Initial post published.